React.js: Achieving 20 ms server response time with Server Side Rendering and caching

We all love React for its rendering performance, modularity, and the freedom it gives you to choose your stack. But one thing really makes it stand out: server-side rendering. Before React, most JavaScript frameworks focused on building single-page applications and did a pretty great job at it. But now it’s 2016, the era of hybrid apps: apps that can run beyond the browser environment. React started a trend by supporting server-side rendering, which enables us to build end-to-end JavaScript apps.

A Little Background

I am currently working on Practo’s Healthfeed, a place where doctors and health specialists write health articles and share their expertise. If nothing else, a blogging platform needs to be good at ONE thing above all: SEO. A blog with good SEO is destined to get more readers than its competitors, and more readers mean even better SEO!

To get better SEO, you have to do a lot of things right. But here we’ll only talk about the two most important, most fundamental ones:

- Make your site crawlable for bots.

- Make it FAST. Like Millennium Falcon fast!

Enter React!

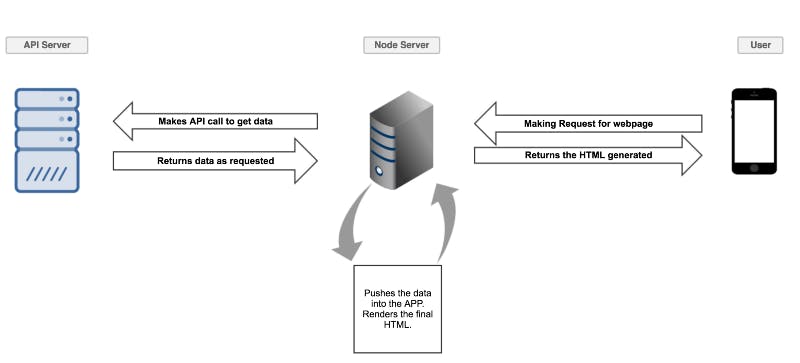

When React came out, one of its selling points was support for server-side rendering (SSR). To make your app support SSR, all you need is a Node server and an API. The modern architecture looks something like this:

Notice that the Node server acts as a middleman between the user and the API server. The flow goes like this:

- The user hits the URL, and the request goes to the Node server.

- The Node server makes a request to the API server and receives the data.

- It passes the data to the React app, which in turn renders the final HTML for the user.

- It returns the HTML string to the user.
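The four steps above can be sketched as a bare Node.js server. This is a minimal, hypothetical sketch rather than Healthfeed’s actual code: `fetchArticleFromApi` stands in for the request to the API server, and `renderApp` stands in for `ReactDOMServer.renderToString(<App data={data} />)` from `react-dom/server`, so the example runs without React installed.

```javascript
const http = require("http");

// Hypothetical helper: React escapes text when rendering; this stub does it by hand.
function escapeHtml(text) {
  return String(text).replace(/[&<>"']/g, (ch) => ({
    "&": "&amp;", "<": "&lt;", ">": "&gt;", '"': "&quot;", "'": "&#39;",
  })[ch]);
}

// Step 2 (hypothetical stand-in): a real app would call the API server over HTTP here.
async function fetchArticleFromApi(path) {
  return { title: `Article at ${path}`, body: "Article body from the API" };
}

// Step 3 (hypothetical stand-in for ReactDOMServer.renderToString).
function renderApp(data) {
  return (
    "<!DOCTYPE html><html><body>" +
    `<h1>${escapeHtml(data.title)}</h1><p>${escapeHtml(data.body)}</p>` +
    "</body></html>"
  );
}

// Steps 2-4: fetch the data, render it, and hand back the HTML string.
async function handleRequest(path) {
  const data = await fetchArticleFromApi(path);
  return renderApp(data);
}

// Step 1: every user request hits this Node server first.
const server = http.createServer(async (req, res) => {
  try {
    const html = await handleRequest(req.url);
    res.writeHead(200, { "Content-Type": "text/html; charset=utf-8" });
    res.end(html);
  } catch (err) {
    res.writeHead(500, { "Content-Type": "text/plain" });
    res.end("Internal Server Error");
  }
});
```

Calling `server.listen(3000)` (any free port works) starts serving pages. Note that every single request waits on both the API round trip and the render.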

Now the whole setup is done! The server is taking requests, the API is responding, and users and bots are finally getting a fully rendered HTML page. But this can turn into a user’s nightmare.

Issues!

- What if the API server is slow? Say, a 500 ms response time.

One problem with server-side rendering is that its response time depends heavily on the API server’s response time. No matter how fast and efficient your app is, the user will stare at a white screen for at least 500 ms, and that assumes your Node server responds in 0 ms, which is practically impossible (for now).

So let’s see the breakdown here:

- 500 ms response time from the API

- 150 ms for server-side rendering (yes, it takes that much)

- 10 ms for the Node server

- 150 ms of network latency

So a user will get a response after about 810 ms! Of course, these are just average numbers; in the real world, it can be a lot worse. Since we don’t have much control over network latency, we’ll leave it out, which puts the server response time at 660 ms.
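The arithmetic behind these two figures, as a quick sketch over the average numbers listed above (illustrative values from the breakdown, not new measurements):

```javascript
// Average timings from the breakdown above, in milliseconds (illustrative values).
const breakdown = {
  apiResponse: 500,
  serverSideRendering: 150,
  nodeServer: 10,
  networkLatency: 150,
};

const sum = (values) => values.reduce((acc, ms) => acc + ms, 0);

// Time until the user receives the page.
const totalMs = sum(Object.values(breakdown));

// Server response time: everything except the network latency we can't control.
const serverMs = totalMs - breakdown.networkLatency;

console.log(`total: ${totalMs} ms, server: ${serverMs} ms`);
// prints "total: 810 ms, server: 660 ms"
```

The API call alone accounts for 500 of those 660 server-side milliseconds, which is why it is the first target.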

To improve the situation, we’ll first catch the biggest fish: API response time.

Enter Redis!

Redis is one of the most powerful in-memory data-structure stores, and it is super fast and efficient. You can store almost anything in it as key-value pairs, and integrating Redis into a Node environment is easy. If we store the API result in Redis, we save the network round trip to the API server.

Now, whenever a user makes a request, the Node server first asks Redis whether it already has the response. If it does, the server passes the cached data straight to the app for rendering and returns the HTML string. If it doesn’t, the server calls the API and stores the result in Redis before passing it to the app.
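This lookup-then-fallback flow is the classic cache-aside pattern. The sketch below is hypothetical: an `InMemoryCache` class stands in for a Redis client (a real client would send Redis `GET`/`SET` commands over the network), and `fetchFromApi`, `renderApp`, and the `api:` key prefix are illustrative names, not the article’s code.

```javascript
// Stand-in for a Redis client, so the sketch runs without a Redis server.
class InMemoryCache {
  constructor() {
    this.store = new Map();
  }
  async get(key) {
    // Redis GET returns null for a missing key; mirror that here.
    return this.store.has(key) ? this.store.get(key) : null;
  }
  async set(key, value) {
    this.store.set(key, value);
  }
}

// Hypothetical API call; counts invocations so cache hits are visible.
let apiCalls = 0;
async function fetchFromApi(path) {
  apiCalls += 1;
  return { path, title: "Healthy habits" };
}

// Hypothetical stand-in for ReactDOMServer.renderToString.
function renderApp(data) {
  return `<h1>${data.title}</h1>`;
}

const cache = new InMemoryCache();

async function handleRequest(path) {
  const key = `api:${path}`;
  const cached = await cache.get(key);
  let data;
  if (cached !== null) {
    // Cache hit: Redis stores strings, so parse the serialized payload.
    data = JSON.parse(cached);
  } else {
    // Cache miss: call the API, then store the result for the next request.
    data = await fetchFromApi(path);
    await cache.set(key, JSON.stringify(data));
  }
  // Rendering still happens on every request, hit or miss.
  return renderApp(data);
}
```

Only the first request for a path reaches the API; later requests skip that round trip but still pay for rendering.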

The new breakdown will be:

- 150 ms for server-side rendering (NOTE: the rendering time might be lower or much higher, depending on your application’s size.)

- 5 ms for the Redis server

- 10 ms for the Node server
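Summing the new breakdown the same way as the old one shows where the savings come from. This is plain arithmetic over the article’s illustrative averages, not a measurement:

```javascript
// Server-side timings in milliseconds, taken from the two breakdowns above.
const withoutCache = { apiResponse: 500, serverSideRendering: 150, nodeServer: 10 };
const withRedis = { redisLookup: 5, serverSideRendering: 150, nodeServer: 10 };

const totalMs = (parts) => Object.values(parts).reduce((acc, ms) => acc + ms, 0);

console.log(`without cache: ${totalMs(withoutCache)} ms, with Redis: ${totalMs(withRedis)} ms`);
// prints "without cache: 660 ms, with Redis: 165 ms"
```

Rendering is now the dominant cost (150 of 165 ms), which points at the next thing worth caching.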

Just by caching our API responses, we have dropped from 660 ms to about 165 ms.

Squeeze More!

Although 165 ms is good, we can squeeze out more with a small trick.

Instead of storing the API response in redis, why not store the whole HTML string itself ?

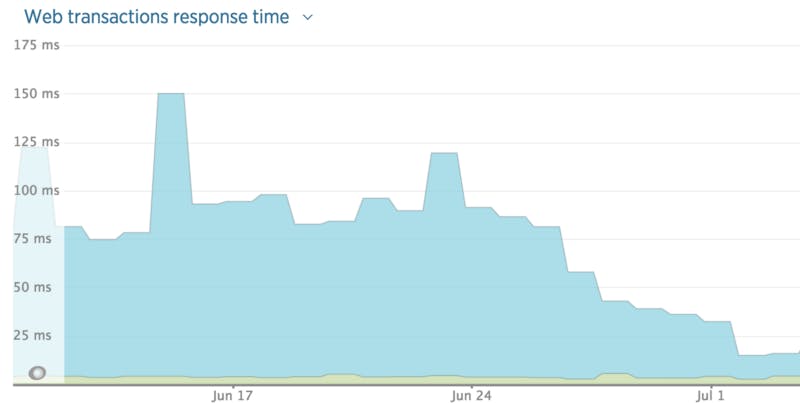

and this was the result !

The average response time fell to 20 ms !
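Caching the rendered page is a small change to the Redis flow: the key now maps to the finished HTML string instead of the API payload. A hypothetical sketch, where a `Map` stands in for Redis and `fetchFromApi`, `renderApp`, and the `html:` key prefix are illustrative names:

```javascript
// A Map stands in for Redis here; with a real client, get/set would be async calls.
const htmlCache = new Map();
let renderCount = 0;

// Hypothetical stand-in for the API call.
async function fetchFromApi(path) {
  return { path, title: "Article title from the API" };
}

// Hypothetical stand-in for ReactDOMServer.renderToString; counts the expensive renders.
function renderApp(data) {
  renderCount += 1;
  return `<!DOCTYPE html><html><body><h1>${data.title}</h1></body></html>`;
}

async function handleRequest(path) {
  const key = `html:${path}`;
  const cachedHtml = htmlCache.get(key);
  if (cachedHtml !== undefined) {
    return cachedHtml; // hit: no API call and no rendering
  }
  // Miss: fetch, render once, and store the finished HTML string.
  const html = renderApp(await fetchFromApi(path));
  htmlCache.set(key, html);
  return html;
}
```

The trade-off is that every cached page is a frozen snapshot: any change to the data or to the deployed bundles leaves stale HTML behind, which is why the cache needs a busting strategy.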

Cache busting

If not handled properly, caching becomes a pain in the ass: people constantly report stale data, and bugs become common. So with great caching comes the need for a good cache-busting strategy. Usually, there are two cases where we have to clear the cache:

- When an author updates an article (i.e., whenever the data changes).

When authors update something, they want to see the changes reflected immediately! For this, we added a small query parameter, `cache=false`. Whenever a URL is hit with this parameter, the Node server makes an API call instead of fetching data from the Redis cache, and the cache is then updated with the newer data.
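A hypothetical sketch of the `cache=false` bypass: the parameter skips the cache read, re-fetches from the API, and overwrites the stored page. A `Map` stands in for Redis, `articleTitle` simulates the article stored behind the API, and the other names are illustrative. The sketch also assumes a page’s content depends only on its path, so the query string is left out of the cache key.

```javascript
const cache = new Map(); // stand-in for Redis
let articleTitle = "Original title"; // simulates the article stored behind the API

// Hypothetical stand-in for the API call.
async function fetchFromApi(pathname) {
  return { path: pathname, title: articleTitle };
}

// Hypothetical stand-in for ReactDOMServer.renderToString.
function renderApp(data) {
  return `<h1>${data.title}</h1>`;
}

async function handleRequest(rawUrl) {
  // req.url is relative, so URL needs a base to parse it.
  const url = new URL(rawUrl, "http://localhost");
  const bypassCache = url.searchParams.get("cache") === "false";
  // Key on the path only, so ?cache=false refreshes the same entry normal requests read.
  const key = `html:${url.pathname}`;

  if (!bypassCache && cache.has(key)) {
    return cache.get(key);
  }
  // Miss or forced refresh: fetch fresh data and overwrite the cached page.
  const html = renderApp(await fetchFromApi(url.pathname));
  cache.set(key, html);
  return html;
}
```

After an edit, one request with `?cache=false` is enough: it refreshes the shared entry, so every later visitor gets the updated page too.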

- Whenever you deploy!

Every deploy generates new chunk hashes for the JS and CSS files. Since a cached HTML string references those files by their hashed names, every cached page becomes invalid after the deployment. Hence, whenever you deploy, the Redis database needs to be flushed completely.
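One possible way to automate the flush, sketched under assumptions: the server records the build identifier it last saw (for example, a value derived from the bundle’s chunk hash) and wipes the cache when a new one shows up. A `Map` stands in for the Redis database, where the equivalent wipe is the `FLUSHDB` command; `flushOnNewBuild` and the `meta:build-id` key are hypothetical names.

```javascript
const cache = new Map(); // stand-in for the Redis database
const BUILD_KEY = "meta:build-id"; // hypothetical key holding the last seen build

// Returns true if the cache was flushed because the build changed.
function flushOnNewBuild(currentBuildId) {
  if (cache.get(BUILD_KEY) === currentBuildId) {
    return false; // same build: cached HTML still points at the right bundles
  }
  cache.clear(); // new build: cached pages reference old JS/CSS filenames
  cache.set(BUILD_KEY, currentBuildId);
  return true;
}
```

Simply running `redis-cli FLUSHDB` as a step in the deploy script achieves the same effect without any application code.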

Conclusions

Node is fast. So is React! But sometimes we need to add a new player to reach a seemingly impossible goal without sacrificing the user experience. Redis is awesome. Use all of these wisely.